Managing and storing the big data that businesses generate is crucial to modern enterprise operations. The data cloud applies technology to solve complex data problems within a business, such as availability, performance, and access. By simplifying data handling, it lowers barriers to entry and provides a consistent information experience anywhere in the world.

Businesses, therefore, need an easy-to-use, reliable data platform that can handle massive volumes of big data at high speed. Snowflake is a Software as a Service (SaaS) offering that provides a single platform for data warehousing, data lakes, and data engineering that supports a multi-cloud infrastructure environment. The Snowflake Data Cloud supports a wide range of solutions and can handle diverse workloads, including data lakes, data science, and information sharing and exchange.

According to Statista, Snowflake had become one of the world’s largest cloud vendors by revenue as of 2022. Snowflake was founded in 2012 and went public in 2020.

Snowflake Data Cloud Service

The Snowflake data platform runs as a layer on top of Amazon Web Services, Microsoft Azure, and Google Cloud infrastructure. With Snowflake, businesses do not need to invest in additional hardware or install software, and Snowflake itself handles ongoing maintenance, so the platform is easy to set up and maintain.

Snowflake data cloud services are highly scalable. The architecture separates storage from compute, so each is scaled and billed independently. This benefits organizations with high storage demands but relatively few CPU cycles, and vice versa, because each pays only for what it actually needs.

Snowflake also offers data-sharing functionality that lets organizations quickly share governed, secure data in real time. The platform's architecture comprises three layers:

- The database storage layer holds both structured and semi-structured data. Snowflake manages how this data is stored, including its organization, file size, structure, compression, metadata, and statistics.

- The compute layer consists of virtual warehouses that execute the data processing required by queries. Each warehouse, whether stand-alone or part of a multi-cluster warehouse, can access all of the data in the storage layer without competing for compute resources with other warehouses.

- The cloud services layer ties the entire system together and removes the need for manual data warehouse management and tuning. It handles authentication, infrastructure management, metadata management, parsing of ANSI SQL queries, and access control.

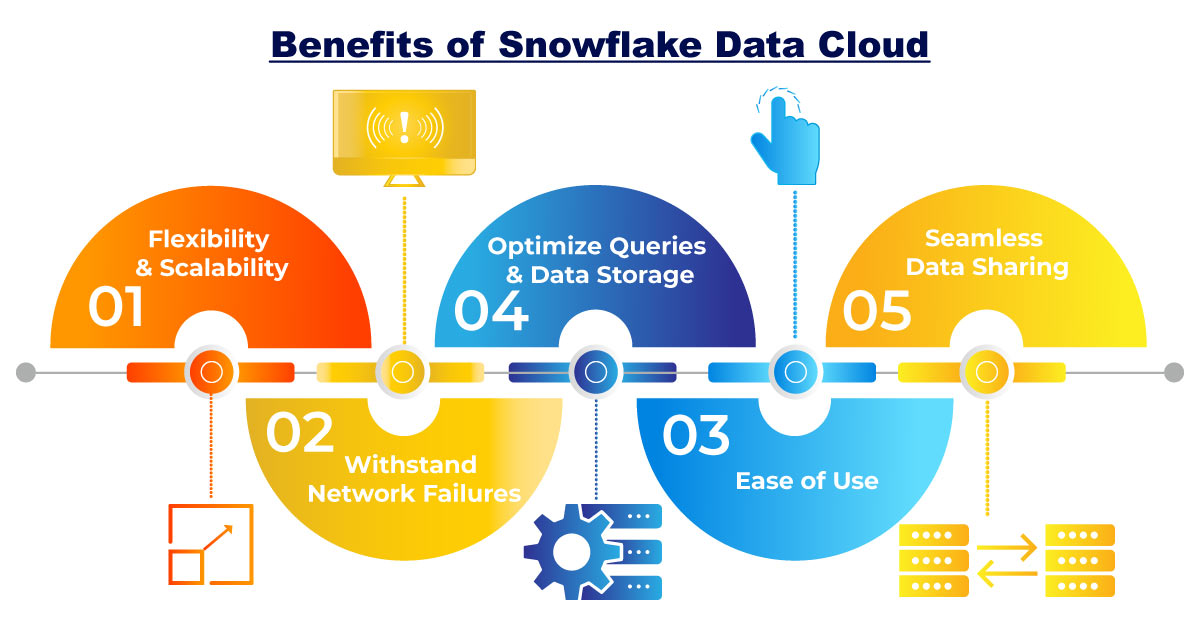

Since Snowflake is specifically built for the cloud, it addresses challenges found in legacy hardware-based data warehouses, such as limited scalability, data transformation issues, and response delays due to high query volumes. Other advantages of Snowflake are listed below.

- Because Snowflake is highly elastic, businesses can easily scale up warehouses to draw on additional computing resources, then scale them back down afterward so they stop paying for capacity they no longer need.

- Both structured and semi-structured data can be harnessed for analysis and loaded into the cloud database without the need for conversion or transformation. The platform automatically optimizes how the data is stored and queried.

- Snowflake handles concurrency with its unique multi-cluster architecture, so queries running in one virtual warehouse do not affect those running in another. Virtual warehouses can be scaled up or down as required, so stakeholders get what they need, when they need it, without waiting for other loading and processing tasks to complete.

- Data sharing is seamless and extends to non-Snowflake users: businesses can create reader accounts for them directly from the user interface.

- Snowflake is SOC 2 Type 2 certified, with additional support for PHI data for HIPAA customers. All network communications are encrypted, and the platform is designed to operate continuously and withstand component and network failures with minimal impact on customers.

- For businesses with a diverse data ecosystem, this cloud-based data platform offers virtually unlimited expansion and scalability, along with ease of use.
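In practice, the elasticity described above usually comes down to resizing a warehouse around a heavy job. The Python sketch below is illustrative only: `scaled_warehouse` is a hypothetical helper, `execute` stands in for something like a Snowflake connector cursor's `execute` method, and `REPORTING_WH` is a made-up warehouse name. It issues `ALTER WAREHOUSE ... SET WAREHOUSE_SIZE` before the job and always scales back afterward.

```python
from contextlib import contextmanager
from typing import Callable, Iterator

# Subset of the sizes accepted by ALTER WAREHOUSE ... SET WAREHOUSE_SIZE.
VALID_SIZES = {"XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE", "XXLARGE"}


@contextmanager
def scaled_warehouse(
    execute: Callable[[str], object],
    warehouse: str,
    burst_size: str,
    baseline_size: str,
) -> Iterator[None]:
    """Resize `warehouse` to `burst_size` for the duration of the block,
    then resize it back to `baseline_size`, even if the block raises."""
    for size in (burst_size, baseline_size):
        if size not in VALID_SIZES:
            raise ValueError(f"unsupported warehouse size: {size}")
    execute(f"ALTER WAREHOUSE {warehouse} SET WAREHOUSE_SIZE = '{burst_size}'")
    try:
        yield
    finally:
        # Scaling back ends the higher per-hour credit rate of the larger size.
        execute(f"ALTER WAREHOUSE {warehouse} SET WAREHOUSE_SIZE = '{baseline_size}'")


if __name__ == "__main__":
    issued: list[str] = []
    with scaled_warehouse(issued.append, "REPORTING_WH", "LARGE", "SMALL"):
        issued.append("-- heavy month-end job runs here")
    print("\n".join(issued))
```

The `try`/`finally` ensures the scale-back statement runs even when the job fails. Note that resizing a running warehouse does not affect queries that are already executing; the new size applies to queued and new queries.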

However, without the right strategy for usage and for access to sensitive data, costs can climb and the benefits described above may remain underutilized.

Implementing a Snowflake environment requires careful attention, because data is expensive for the business. Partner with Gemini Consulting & Services to ensure that the right checks are in place while staying mindful of the costs involved. Contact us to learn how we can help you leverage the capabilities of Snowflake to improve efficiency.

Optimize the Performance of the Snowflake Data Cloud

The Data Warehouse

- Group similar workloads so that the configuration settings that affect efficiency can be tuned for each group. Snowflake virtual warehouses come in a range of sizes, and each step up in size doubles the credits consumed per hour. Credits are billed per second, with a 60-second minimum each time a warehouse starts or resumes, for as long as the warehouse is running, whether or not queries are executing. As a result, both warehouse size and idle time drive consumption. Warehouse settings such as auto-suspend, auto-resume, scaling policy, cluster count, and statement timeout can all be adjusted to match the query workload.

- Set up clear data SLAs that define each workload and the value it delivers. Monitor query logs to determine workload type, frequency, typical scan size, and so on, then talk to business stakeholders about how fresh they expect the data to be and what that freshness is really worth.

- Start small and settle on the optimal warehouse size by experimenting with query sizes and complexity, aiming for queries that complete within 5-10 minutes. Keep an eye on the queue to make sure jobs aren’t left pending indefinitely, because queuing has a cascading effect on the overall workload.

- After grouping workloads and rightsizing warehouses, keep resource usage and data volume in check. Set up Snowflake resource monitors to track credit usage and act proactively when a threshold is reached, for example by sending a notification or suspending the warehouse.
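To see how warehouse size and auto-suspend interact, here is a rough Python estimate. It assumes the standard-warehouse rate of 1 credit per hour for X-Small, doubling with each size, per-second billing with a 60-second minimum per resume, and a simplified usage pattern in which the warehouse idles for exactly the AUTO_SUSPEND period before suspending. `credits_for_session` and `warehouse_settings_sql` are hypothetical helpers; `AUTO_SUSPEND`, `AUTO_RESUME`, and `STATEMENT_TIMEOUT_IN_SECONDS` are real warehouse properties, but rates should be checked against current Snowflake pricing.

```python
# Credits per hour for standard warehouses, X-Small through 2X-Large.
# Assumed from Snowflake's published rates; each size step doubles the rate.
CREDITS_PER_HOUR = {
    "XSMALL": 1,
    "SMALL": 2,
    "MEDIUM": 4,
    "LARGE": 8,
    "XLARGE": 16,
    "XXLARGE": 32,
}

MIN_BILLED_SECONDS = 60  # each start/resume is billed for at least one minute


def credits_for_session(size: str, busy_seconds: float, auto_suspend_seconds: float) -> float:
    """Estimate credits for one resume-to-suspend cycle.

    Hypothetical usage pattern: the warehouse resumes for the first query,
    stays busy for `busy_seconds`, then sits idle for exactly
    `auto_suspend_seconds` before suspending. Idle time is billed like busy time.
    """
    billed_seconds = max(busy_seconds + auto_suspend_seconds, MIN_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


def warehouse_settings_sql(name: str, auto_suspend_seconds: int, statement_timeout_seconds: int) -> str:
    """Build an ALTER WAREHOUSE statement applying the chosen settings."""
    return (
        f"ALTER WAREHOUSE {name} SET AUTO_SUSPEND = {auto_suspend_seconds} "
        f"AUTO_RESUME = TRUE STATEMENT_TIMEOUT_IN_SECONDS = {statement_timeout_seconds}"
    )


if __name__ == "__main__":
    # Hypothetical workload: 20 separate 90-second bursts per day on a MEDIUM warehouse.
    for auto_suspend in (60, 600):
        daily = 20 * credits_for_session("MEDIUM", 90, auto_suspend)
        print(f"AUTO_SUSPEND={auto_suspend:>3}s -> {daily:.2f} credits/day")
    print(warehouse_settings_sql("ETL_WH", 60, 3600))
```

Under these assumptions, the 60-second setting comes to about 3.33 credits per day versus about 15.33 credits for 600 seconds, because most of the billed time in the second case is idle.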

Queries

Optimize queries to keep operations hassle-free. Setting the QUERY_TAG session parameter attaches a tag to every SQL statement run in that session, which helps teams identify trends and issues in the data pipeline. Teams must apply tags consistently for this to work. Snowflake also makes it possible to identify the most expensive queries run in a defined period.
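A minimal Python sketch of the tagging workflow follows. It assumes query history rows shaped like those in the `SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY` view, using its `QUERY_TAG` and `TOTAL_ELAPSED_TIME` (milliseconds) columns; the tag names and sample rows are invented, and `set_query_tag_sql` and `top_tags_by_elapsed` are hypothetical helpers. Total elapsed time is only a proxy for cost, since credits also depend on warehouse size and how many queries share the warehouse.

```python
from collections import defaultdict
from typing import Any, Iterable, Mapping


def set_query_tag_sql(tag: str) -> str:
    """Build the statement that tags every later query in the session."""
    escaped = tag.replace("'", "''")  # double single quotes inside a SQL string literal
    return f"ALTER SESSION SET QUERY_TAG = '{escaped}'"


def top_tags_by_elapsed(rows: Iterable[Mapping[str, Any]], limit: int = 3) -> list[tuple[str, float]]:
    """Sum TOTAL_ELAPSED_TIME per QUERY_TAG and return the heaviest tags first.

    Untagged queries (empty or missing tag) are grouped under "<untagged>".
    """
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        tag = row.get("QUERY_TAG") or "<untagged>"
        totals[tag] += float(row["TOTAL_ELAPSED_TIME"])
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:limit]


if __name__ == "__main__":
    print(set_query_tag_sql("etl_orders"))
    # Invented sample rows standing in for a QUERY_HISTORY result set.
    sample = [
        {"QUERY_TAG": "etl_orders", "TOTAL_ELAPSED_TIME": 120_000},
        {"QUERY_TAG": "dashboard_sales", "TOTAL_ELAPSED_TIME": 4_000},
        {"QUERY_TAG": "etl_orders", "TOTAL_ELAPSED_TIME": 95_000},
        {"QUERY_TAG": "", "TOTAL_ELAPSED_TIME": 30_000},
    ]
    for tag, total_ms in top_tags_by_elapsed(sample):
        print(f"{tag}: {total_ms / 1000:.0f} s")
```

Grouping by tag rather than by raw query text is what makes consistent tagging pay off: every statement from a pipeline rolls up under one name.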

Tables

- The search optimization service, table clustering, and materialized views make queries on large tables more efficient in both time and credit consumption. Snowflake automatically divides data into micro-partitions in the order it is ingested, but this natural clustering degrades as the table changes and takes effort to maintain over time. Table clustering defines a clustering key that co-locates similar rows in the same micro-partitions, so queries that filter on that key can skip partitions that cannot contain matching rows. This works best for very large tables that are queried by selecting a small subset of rows.

- For long-running queries, say those that run for more than tens of seconds and potentially return 100k-200k values, Snowflake Enterprise Edition offers materialized views: pre-computed data sets stored for later use. Materialized views support a cost optimization strategy for queries that run frequently, are complex, or target a subsection of a large data set. They are also a good fit when query results do not change frequently.

- Drop unused tables only after confirming with data engineers that they no longer need them.
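The effect of clustering on partition pruning can be illustrated with a toy Python simulation. This is not Snowflake's implementation: it assumes fixed-size partitions that keep only min/max metadata for a single column, uses sorting as a stand-in for reclustering on a key (what `ALTER TABLE ... CLUSTER BY (order_date)` asks Snowflake to maintain), and uses invented data. A query with a narrow range filter only needs to scan partitions whose min/max range overlaps the filter.

```python
import random
from dataclasses import dataclass


@dataclass
class MicroPartition:
    min_val: int
    max_val: int


def build_partitions(values: list[int], rows_per_partition: int) -> list[MicroPartition]:
    """Split values into fixed-size chunks in the given (ingestion) order,
    keeping only min/max metadata for each chunk."""
    chunks = (values[i:i + rows_per_partition] for i in range(0, len(values), rows_per_partition))
    return [MicroPartition(min(chunk), max(chunk)) for chunk in chunks]


def partitions_scanned(parts: list[MicroPartition], lo: int, hi: int) -> int:
    """Count partitions whose [min, max] range overlaps lo <= x <= hi.
    Every other partition can be pruned without reading it."""
    return sum(1 for p in parts if p.max_val >= lo and p.min_val <= hi)


if __name__ == "__main__":
    rng = random.Random(42)
    # Invented data: day-of-year order dates arriving in random order.
    order_dates = [rng.randrange(0, 365) for _ in range(100_000)]
    unclustered = build_partitions(order_dates, 1_000)
    clustered = build_partitions(sorted(order_dates), 1_000)  # stand-in for CLUSTER BY
    print("unclustered:", partitions_scanned(unclustered, 100, 106), "of", len(unclustered))
    print("clustered:  ", partitions_scanned(clustered, 100, 106), "of", len(clustered))
```

With data arriving in random order, nearly every partition spans the whole year, so almost none can be pruned; after clustering, only the few partitions covering the requested week are scanned.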