The rise of Generative Artificial Intelligence (GenAI) and Large Language Models (LLMs) such as ChatGPT has sent shockwaves across industries and businesses worldwide, and we have all felt the impact. These disruptions have unlocked new capabilities, efficiencies, and opportunities while simultaneously upending established structures, workflows, governance, and operational norms.

Then came DeepSeek, a Chinese AI company that stunned the world in January this year by delivering a chatbot that rivals OpenAI’s ChatGPT, seemingly at a fraction of the cost. As DeepSeek’s emergence intensifies the AI race, concerns over data security have also gained traction.

Regulators in Italy have taken action, blocking the DeepSeek app from Apple’s and Google’s app stores in the country while investigating the company’s data collection practices and storage methods. Authorities in France and Ireland are also examining whether the AI chatbot poses privacy risks. Meanwhile, in the US, the House’s Chief Administrative Officer has advised members of Congress and their staff to refrain from using the app, and Texas has already banned DeepSeek from state-issued devices.

What Data Do AI Apps Collect?

DeepSeek’s privacy policy reveals that the service gathers an extensive range of user data, including chat and search history, device details, keystroke patterns, IP addresses, internet connection specifics, and activity from other apps.

This level of data collection is not unique to DeepSeek. Other AI platforms, such as OpenAI’s ChatGPT, Anthropic’s Claude, and Perplexity, similarly harvest substantial user data. Social media platforms like Facebook, Instagram, and X also engage in comparable data collection.

Data security remains a fundamental concern when using AI chatbots, and this is not an issue exclusive to DeepSeek. Even US-based AI firms, including OpenAI, have faced intense scrutiny and regulatory investigations in the EU over potential data privacy violations.

What Makes DeepSeek Different?

The key distinction lies in where the collected data is sent. DeepSeek transmits user data to servers in China, which raises alarms among cybersecurity experts. The primary concern is that a China-based company remains subject to the directives of an authoritarian government, potentially putting users’ sensitive data at risk.

Experts warn that the Chinese government could leverage this data to analyze user behavior, refine phishing tactics, or engage in other forms of digital manipulation. While no evidence has surfaced that Chinese authorities are exploiting DeepSeek to access foreign users’ data, it remains crucial for users to exercise caution and take proactive measures to safeguard their personal information when using AI applications.

Gemini Consulting & Services provides enterprises with security solutions to keep their data safe. Contact us to learn how to leverage AI tools without compromising data privacy.

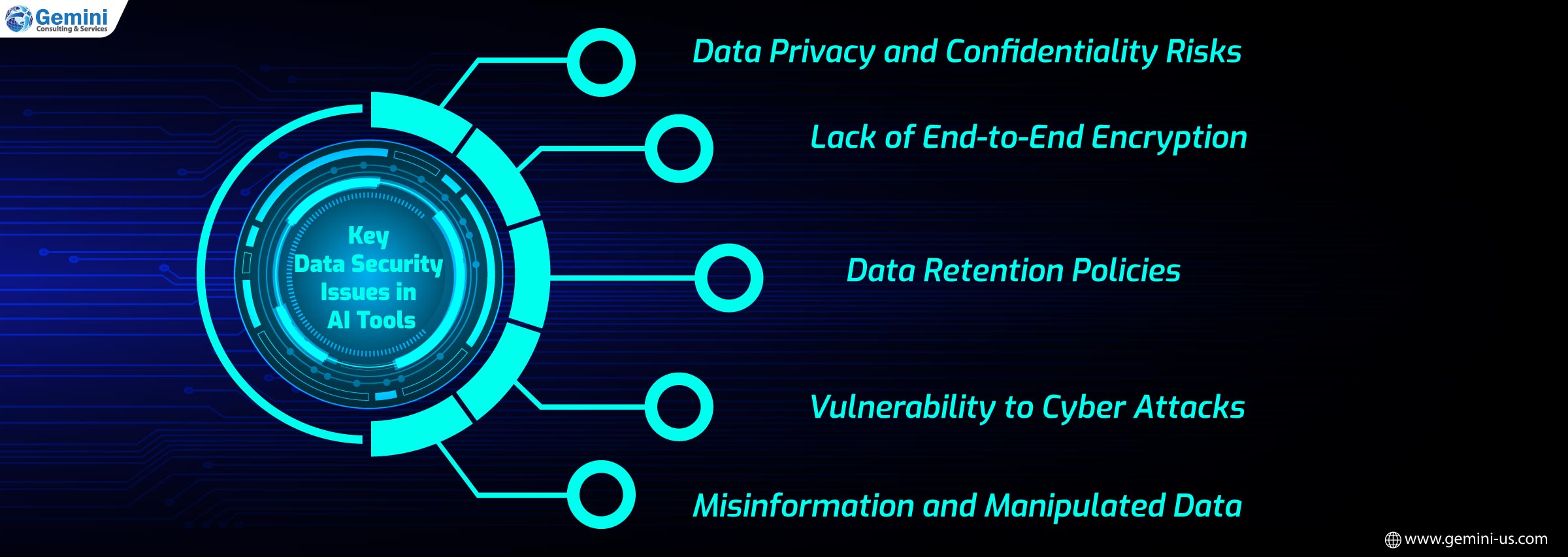

1. Data Privacy and Confidentiality Risks

AI tools operate by processing large volumes of text-based inputs, which may include sensitive personal, corporate, or financial data. When users share confidential details without realizing how the AI system stores or processes them, they risk exposing sensitive information to potential breaches or misuse.

2. Lack of End-to-End Encryption

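Unlike secure messaging platforms that encrypt data from sender to receiver, AI chatbots generally cannot offer end-to-end encryption: even when traffic is encrypted in transit, the provider’s servers must decrypt user input in order to process it. This means prompts can be logged, stored, or accessed on the provider’s side in ways that might not be fully secure, and apps with weak transit encryption may also expose them to interception.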

3. Data Retention Policies

Some AI tools retain user data to improve their algorithms or provide better responses in the future. However, if companies store user inputs for extended periods without transparency, it increases the risk of data leaks or misuse.

4. Vulnerability to Cyber Attacks

AI systems can be targets for cybercriminals who may attempt to exploit vulnerabilities, manipulate responses (for example, through prompt injection), or gain access to stored data. Any security loophole in an AI tool’s framework can expose sensitive user data to hacking attempts.

5. Misinformation and Manipulated Data

While AI models are highly advanced, they are not immune to generating misleading or biased information. Users who rely on AI for critical decisions without verifying its output could fall victim to misinformation, leading to financial, reputational, or security risks.

Despite these risks, users can take proactive steps to ensure data security when engaging with AI applications.

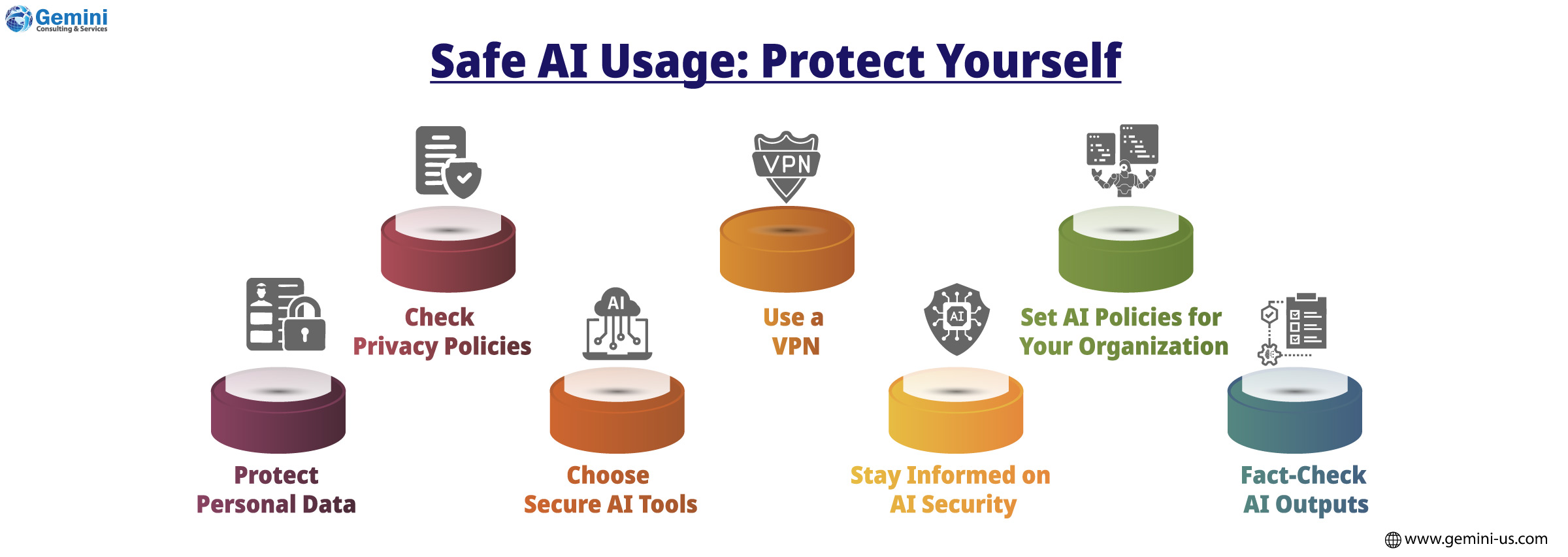

1. Avoid Sharing Sensitive Information

The most effective way to protect your data is to be mindful of what you input into AI tools. Avoid sharing personal identifiers such as full names, as well as financial details, passwords, or proprietary business information. If using AI for professional purposes, anonymize data whenever possible.
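For teams that route prompts through an internal tool, part of this anonymization can be automated before anything is sent to an AI service. The Python sketch below is illustrative only: the `redact` function and its regular expressions are our own assumptions, not a feature of any AI platform, and they catch only common email, phone-number, and card-number formats. Names, addresses, and business secrets still call for human judgment or a dedicated PII-detection tool.

```python
import re

# Illustrative patterns only (an assumption, not a complete PII detector).
# Order matters: card numbers are replaced before phone numbers so that
# long digit runs are not partially matched as phones.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # Optional +country code, then a 3-3-4 digit grouping.
    "PHONE": re.compile(r"(?<!\d)(?:\+\d{1,3}[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}(?!\d)"),
}


def redact(text: str) -> str:
    """Replace each pattern match with a placeholder such as [EMAIL]."""
    for label, pattern in REDACTION_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = ("Draft a refund email to jane.doe@example.com, phone 555-123-4567, "
              "card 4111 1111 1111 1111.")
    print(redact(prompt))
    # → Draft a refund email to [EMAIL], phone [PHONE], card [CARD].
```

Placeholders keep the prompt readable for the AI model while the real values never leave your environment.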

2. Review Privacy Policies

Before using an AI tool, review its privacy policy and data retention practices. Check whether the platform stores user inputs, how long it retains data, and whether it shares information with third parties. Opt for tools that prioritize user privacy and provide transparency in data handling.

3. Use AI Tools with Strong Security Features

Choose AI platforms that implement robust security measures, such as encryption and two-factor authentication. If an AI tool offers additional privacy settings, enable them to enhance your data protection.

4. Use a VPN for Additional Security

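A Virtual Private Network (VPN) can add an extra layer of security by encrypting the connection between your device and the VPN server, making it harder for others on the same network to intercept your data. This is especially useful when using AI tools on public or unsecured networks. Keep in mind that a VPN does not limit what the AI provider itself collects from your inputs.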

5. Stay Updated on AI Security Best Practices

AI security measures evolve constantly, and staying informed about potential risks is crucial. Follow updates from cybersecurity experts, AI developers, and technology forums to understand how to safely interact with AI tools.

6. Implement Organization-Level AI Policies

For businesses utilizing AI tools, it is essential to establish clear policies on how employees should engage with AI systems. Guidelines should include restricting sensitive data input, using vetted AI platforms, and ensuring compliance with data protection laws such as GDPR or CCPA.
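One way to put such guidelines into practice is an automated check at an internal gateway or proxy before a request reaches an external AI service. The Python sketch below is hypothetical: the approved hostnames, the restricted markers, and the `check_ai_request` function are placeholders that an organization would replace with its own vetted platforms and data-classification labels.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical allowlist of AI services the organization has vetted.
APPROVED_AI_HOSTS = {"ai-gateway.example.com", "chat.example.org"}

# Hypothetical classification labels that must never leave the company.
BLOCKED_MARKERS = ("CONFIDENTIAL", "INTERNAL ONLY")


@dataclass
class PolicyDecision:
    allowed: bool
    reason: str


def check_ai_request(url: str, prompt: str) -> PolicyDecision:
    """Allow a request only if it targets a vetted host and carries no restricted label."""
    host = urlparse(url).hostname or ""
    if host not in APPROVED_AI_HOSTS:
        return PolicyDecision(False, f"{host or 'unknown host'} is not an approved AI service")
    upper_prompt = prompt.upper()  # case-insensitive label matching
    for marker in BLOCKED_MARKERS:
        if marker in upper_prompt:
            return PolicyDecision(False, f"prompt contains restricted marker '{marker}'")
    return PolicyDecision(True, "ok")


if __name__ == "__main__":
    print(check_ai_request("https://chat.example.org/api", "Summarize this press release"))
    print(check_ai_request("https://chat.example.org/api", "Internal only: Q3 roadmap"))
```

Keeping the allowlist and markers as plain data makes the policy easy to review and update without touching the checking logic.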

7. Verify AI-Generated Information

Do not blindly trust AI-generated content, especially for critical decisions. Cross-check facts with reliable sources before acting on AI-generated insights to prevent misinformation from influencing your work or decisions.

Conclusion

AI tools like ChatGPT and DeepSeek offer powerful capabilities, but users must be mindful of data security risks when engaging with them. By understanding potential vulnerabilities and implementing best practices, individuals and businesses can safely harness AI’s benefits while minimizing security threats.

Adopting a cautious approach, such as avoiding sensitive data sharing, using secure platforms, and verifying AI outputs, helps users remain in control of their information while leveraging AI effectively. With responsible usage, AI can be a game-changer without compromising privacy or security.